Artificial intelligence (AI) systems are increasingly finding their way into medical practice. They have been used for years to help pathologists screen microscope slides. One of the most obvious applications in trauma, though still remarkably complicated, is reading x-rays and CT scans. Counting rib fractures may be helpful for care planning, and characterizing fracture patterns may help our orthopedic colleagues evaluate and plan rib plating procedures.

The trauma group at Stanford developed a computer vision system to help identify rib fractures and their percent displacement. They used a variant of a deep learning neural network and trained it on a publicly available chest CT dataset. To test how well their model matched an actual radiologist's reading, they used the Dice similarity coefficient (DICE score), which measures how closely the region outlined by the model overlaps the region outlined by the radiologist (0 means no overlap, 1 means a perfect match).
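For readers curious about the arithmetic, the Dice score is twice the number of pixels that both readers marked, divided by the total number of pixels each reader marked. Here is a minimal Python sketch of that formula on tiny made-up masks (1 = fracture, 0 = no fracture). The function name `dice_score` and the toy masks are hypothetical illustrations, not the Stanford group's code.

```python
def dice_score(pred, truth):
    """Dice = 2 * |A and B| / (|A| + |B|) for two same-sized binary masks.

    pred and truth are 2D lists of 0/1 values (hypothetical toy masks),
    e.g. the model's segmentation and the radiologist's annotation.
    """
    # Flatten each 2D mask into a single list of pixels
    pred_px = [bool(v) for row in pred for v in row]
    truth_px = [bool(v) for row in truth for v in row]
    if len(pred_px) != len(truth_px):
        raise ValueError("masks must be the same size")

    # Pixels that both the model and the radiologist marked as fracture
    overlap = sum(1 for p, t in zip(pred_px, truth_px) if p and t)
    # Pixels marked by each reader, added together
    marked = sum(pred_px) + sum(truth_px)
    if marked == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * overlap / marked


# Toy example: the model marks 3 pixels, the radiologist marks 2, and 2 overlap
model = [[0, 1, 1],
         [0, 1, 0]]
radiologist = [[0, 1, 1],
               [0, 0, 0]]
print(dice_score(model, radiologist))  # 2 * 2 / (3 + 2) = 0.8
```

The extra pixel the model marked on its own is what pulls the score below 1.0; missed pixels would lower it the same way.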

Here are the factoids:

- The AI network was trained on a dataset of 5,000 images in 660 chest CT scans that had been annotated by radiologists

- The model achieved a DICE score of 0.88 after training

- With a little jiggering of the model (class reweighting), the area under the receiver operating characteristic curve (ROC AUC) improved to 0.99, which is nearly perfect
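The 0.99 figure is the area under the ROC curve. One intuitive way to read it: pick one region that truly contains a fracture and one that does not; the AUC is the probability that the model gives the fracture region the higher score. The sketch below computes AUC exactly that way, by comparing every fracture/non-fracture pair (ties count as half). The function name `roc_auc`, the scores, and the labels are invented for illustration and say nothing about the actual study.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    scores: model confidence that each case is a fracture (higher = more likely)
    labels: ground truth for each case, 1 = fracture, 0 = no fracture
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one fracture and one non-fracture case")

    # Count how often a true fracture outscores a non-fracture; ties count half
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical example: one fracture (score 0.4) is ranked below one normal case (0.5)
scores = [0.9, 0.4, 0.5, 0.7, 0.2]
labels = [1, 1, 0, 1, 0]
print(roc_auc(scores, labels))  # 5 of 6 pairs ranked correctly, about 0.833
```

An AUC of 0.5 is no better than a coin flip, and 1.0 means every fracture outranks every non-fracture. The pairwise loop is slow on large datasets; it is written this way only because it mirrors the definition.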

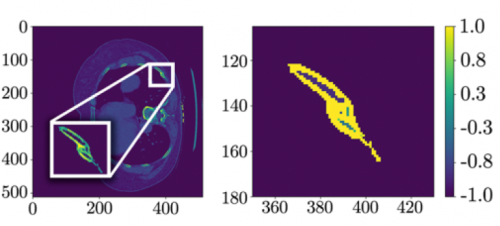

The left side shows a CT scan rotated 90 degrees; the right side shows the processed data after a fracture was detected.

Bottom line: This paper hints at what lies ahead for healthcare in general. The increasing sophistication and accuracy of AI applications will help trauma professionals do their jobs better. But rest easy, these systems will not take our jobs anytime soon. What we do (for the most part) requires very complex processing and decision making. It will be quite some time before these systems can do anything more than augment what we do.

Expect to see these AI products integrated with PACS viewing systems in the not-so-distant future. The radiologist will interpret images in conjunction with the AI, which will highlight suspicious areas on the images as an assist. The radiologist can then make sure they have reported on every region flagged by either themselves or the AI.

Here are my questions and comments for the presenter/authors:

- How can you be sure that your model isn’t good only at analyzing your training and test datasets? If neural networks are overtrained (overfit), they get very good at the original datasets but are not so good at analyzing novel ones. Have you tried it on your own data yet?

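- Explain what “class reweighting” is and how it improved your model. I presume you used this technique to compensate for class imbalance (far more normal rib than fractured rib on any given scan), which is a different problem from the overfitting issue mentioned above. But be sure to explain this in simple terms to the audience.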

- Don’t lose the audience in the neural network details. You will need to give a basic description of how deep learning networks are developed and how they work, but don’t get too fancy.
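For the “class reweighting” question above, here is one common form of it, sketched in Python. On a chest CT, fracture pixels are vastly outnumbered by normal ones, so a network can look good by calling everything normal. Reweighting makes each mistake on the rare class cost more during training. This sketch assumes inverse-frequency weights and a weighted binary cross-entropy loss; the authors may well have used a different scheme, and the function names and numbers are hypothetical.

```python
import math


def inverse_frequency_weights(labels):
    """Weight each class by n / (2 * class_count) so both classes
    contribute equally to the total loss (an assumed scheme)."""
    n = len(labels)
    n_pos = sum(labels)  # fracture pixels (label 1)
    n_neg = n - n_pos    # normal pixels (label 0)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    return {1: n / (2 * n_pos), 0: n / (2 * n_neg)}


def weighted_bce(probs, labels, weights):
    """Average binary cross-entropy, each pixel's loss scaled by its class weight."""
    eps = 1e-12  # keep log() away from 0
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)
        loss = -math.log(p) if y == 1 else -math.log(1 - p)
        total += weights[y] * loss
    return total / len(labels)


# Hypothetical toy data: 1 fracture pixel among 10
labels = [1] + [0] * 9
# A lazy model that says "probably no fracture" (p = 0.1) everywhere
probs = [0.1] * 10

weights = inverse_frequency_weights(labels)
plain = weighted_bce(probs, labels, {0: 1.0, 1: 1.0})
reweighted = weighted_bce(probs, labels, weights)
print(round(plain, 3), round(reweighted, 3))  # prints 0.325 1.204
```

With these weights the single fracture pixel (weight 5.0) carries as much total weight as the nine normal pixels combined, so the lazy "everything is normal" model is penalized far more heavily than it would be without reweighting.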

This is an interesting glimpse into what is coming to a theater near you, so to speak. Expect to see applications appearing in the next few years.

Reference: AUTOMATED RIB FRACTURE DETECTION AND CHARACTERIZATION ON COMPUTED TOMOGRAPHY SCANS USING COMPUTER VISION. EAST 2023 Podium paper #16.